A new study has found that Fourier analysis, a mathematical technique that has been around for 200 years, can be used to reveal important information about how deep neural networks learn to perform complex physics tasks, such as climate and turbulence modeling. This research highlights the potential of Fourier analysis as a tool for gaining insights into the inner workings of artificial intelligence and could have significant implications for the development of more effective machine learning algorithms.

Scientific AI’s “Black Box” Is No Match for 200-Year-Old Method

Fourier transformations reveal how a deep neural network learns complex physics.

One of the oldest tools in computational physics, a 200-year-old mathematical technique known as Fourier analysis, can reveal crucial information about how a form of artificial intelligence called a deep neural network learns to perform tasks involving complex physics like climate and turbulence modeling, according to a new study.

The discovery by mechanical engineering researchers at Rice University is described in an open-access study published in the journal PNAS Nexus, a sister publication of the Proceedings of the National Academy of Sciences.

“This is the first rigorous framework to explain and guide the use of deep neural networks for complex dynamical systems such as climate,” said study corresponding author Pedram Hassanzadeh. “It could substantially accelerate the use of scientific deep learning in climate science, and lead to much more reliable climate change projections.”

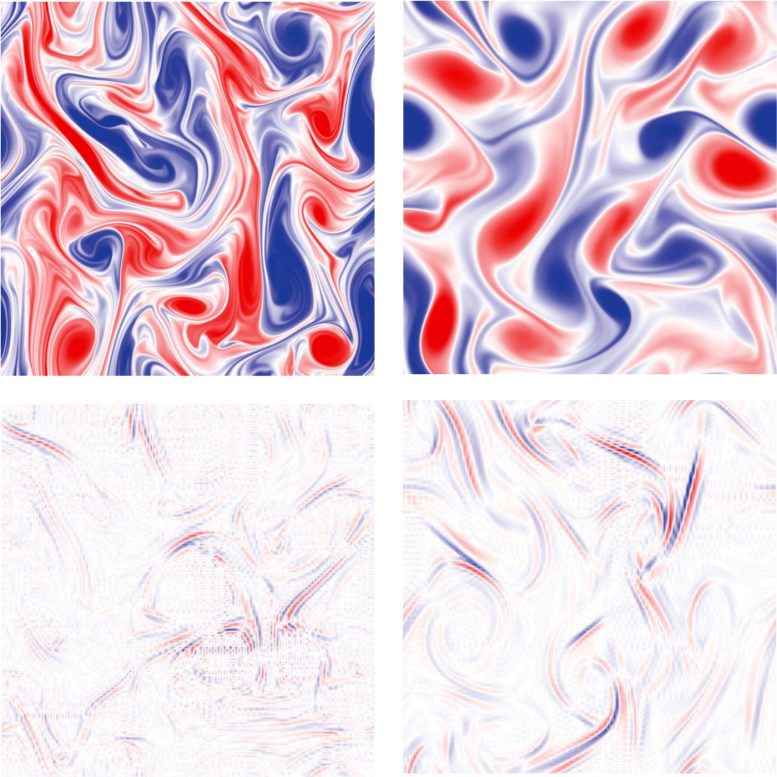

Rice University researchers trained a form of artificial intelligence called a deep learning neural network to recognize complex flows of air or water and predict how flows will change over time. This visual illustrates the substantial differences in the scale of features the model is shown during training (top) and the features it learns to recognize (bottom) to make its predictions. Credit: Image courtesy of P. Hassanzadeh/Rice University

In the paper, Hassanzadeh, Adam Subel and Ashesh Chattopadhyay, both former students, and Yifei Guan, a postdoctoral research associate, detailed their use of Fourier analysis to study a deep learning neural network that was trained to recognize complex flows of air in the atmosphere or water in the ocean and to predict how those flows would change over time. Their analysis revealed “not only what the neural network had learned, it also enabled us to directly connect what the network had learned to the physics of the complex system it was modeling,” Hassanzadeh said.

“Deep neural networks are infamously hard to understand and are often considered ‘black boxes,’” he said. “That is one of the major concerns with using deep neural networks in scientific applications. The other is generalizability: These networks cannot work for a system that is different from the one for which they were trained.”

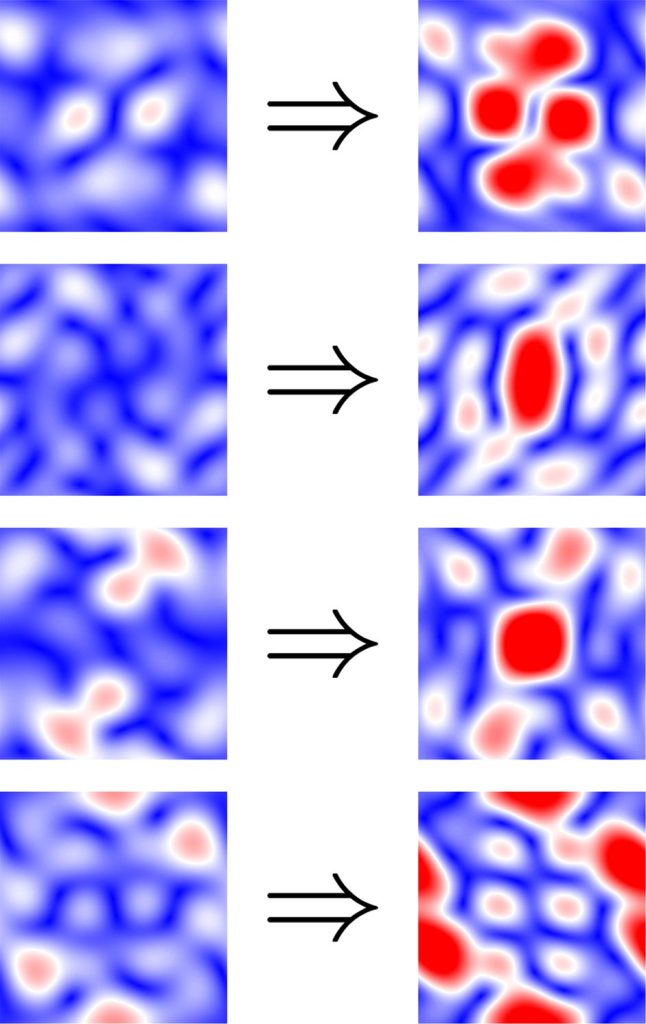

Training state-of-the-art deep neural networks requires a great deal of data, and the burden for re-training, with current methods, is still significant. After training and re-training a deep learning network to perform different tasks involving complex physics, Rice University researchers used Fourier analysis to compare all 40,000 kernels from the two iterations and found more than 99% were similar. This illustration shows the Fourier spectra of the four kernels that most differed before (left) and after (right) re-training. The findings demonstrate the method’s potential for identifying more efficient paths for re-training that require significantly less data. Credit: Image courtesy of P. Hassanzadeh/Rice University

Hassanzadeh said the analytic framework his team presents in the paper “opens up the black box, lets us look inside to understand what the networks have learned and why, and also lets us connect that to the physics of the system that was learned.”

Subel, the study’s lead author, began the research as a Rice undergraduate and is now a graduate student at New York University. He said the framework could be used in combination with techniques for transfer learning to “enable generalization and ultimately increase the trustworthiness of scientific deep learning.”

While many prior studies had attempted to reveal how deep learning networks learn to make predictions, Hassanzadeh said he, Subel, Guan and Chattopadhyay chose to approach the problem from a different perspective.

Pedram Hassanzadeh. Credit: Rice University

“The common machine learning tools for understanding neural networks have not shown much success for natural and engineering system applications, at least such that the findings could be connected to the physics,” Hassanzadeh said. “Our thought was, ‘Let’s do something different. Let’s use a tool that’s common for studying physics and apply it to the study of a neural network that has learned to do physics.’”

He said Fourier analysis, which was first proposed in the 1820s, is a favorite technique of physicists and mathematicians for identifying frequency patterns in space and time.

“People who do physics almost always look at data in the Fourier space,” he said. “It makes physics and math easier.”

For example, if someone had a minute-by-minute record of outdoor temperature readings for a one-year period, the information would be a string of 525,600 numbers, a type of data set physicists call a time series. To analyze the time series in Fourier space, a researcher would use trigonometry to transform each number in the series, creating another set of 525,600 numbers that would contain information from the original set but look quite different.

“Instead of seeing temperature at every minute, you would see just a few spikes,” Subel said. “One would be the cosine of 24 hours, which would be the day and night cycle of highs and lows. That signal was there all along in the time series, but Fourier analysis allows you to easily see those types of signals in both time and space.”
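
The temperature example can be sketched in a few lines of Python. This is a minimal illustration, not code from the study: the synthetic series, its amplitudes, and the helper names `synthetic_temperature` and `dominant_periods_minutes` are all assumptions made for the example, and NumPy's `rfft` stands in for the trigonometric transform described above.

```python
import numpy as np

MINUTES_PER_DAY = 24 * 60
MINUTES_PER_YEAR = 365 * MINUTES_PER_DAY  # 525,600 samples


def synthetic_temperature(seed=0):
    """Hypothetical minute-by-minute temperatures (deg C) for one year."""
    rng = np.random.default_rng(seed)
    t = np.arange(MINUTES_PER_YEAR)
    daily = 5.0 * np.cos(2 * np.pi * t / MINUTES_PER_DAY)       # day/night cycle
    seasonal = 10.0 * np.cos(2 * np.pi * t / MINUTES_PER_YEAR)  # annual cycle
    noise = rng.normal(0.0, 1.0, size=t.size)                   # weather "noise"
    return 15.0 + daily + seasonal + noise


def dominant_periods_minutes(series, count=2):
    """Periods (in minutes) of the `count` strongest Fourier peaks."""
    spectrum = np.fft.rfft(series - series.mean())
    amplitude = np.abs(spectrum)
    freqs = np.fft.rfftfreq(series.size, d=1.0)  # cycles per minute
    # Skip index 0 (the mean), then sort the rest from strongest to weakest.
    strongest = np.argsort(amplitude[1:])[::-1][:count] + 1
    return [float(1.0 / freqs[k]) for k in strongest]


temps = synthetic_temperature()
periods = dominant_periods_minutes(temps)
print([round(p / 60.0, 1) for p in periods])  # periods in hours
```

In this synthetic series the two strongest peaks are the annual cycle and the 24-hour cycle, even though neither is obvious from scanning the raw list of 525,600 readings.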

Based on this method, scientists have developed other tools for time-frequency analysis. For instance, low-pass filters remove rapidly varying components such as high-frequency noise, and high-pass filters do the inverse, removing the slowly varying background.
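
One simple way to build such filters is to zero out part of a signal's Fourier spectrum and transform back. The sketch below assumes that approach; the function name `fourier_filter`, the cutoff value, and the two-wave test signal are hypothetical choices for illustration, not the filters used in the study.

```python
import numpy as np


def fourier_filter(signal, cutoff, keep="low", sample_spacing=1.0):
    """Zero out Fourier components on one side of `cutoff` (cycles per sample)."""
    spectrum = np.fft.rfft(signal)
    freqs = np.fft.rfftfreq(signal.size, d=sample_spacing)
    if keep == "low":
        mask = freqs <= cutoff   # low-pass: keep slowly varying components
    elif keep == "high":
        mask = freqs > cutoff    # high-pass: keep rapidly varying components
    else:
        raise ValueError("keep must be 'low' or 'high'")
    return np.fft.irfft(spectrum * mask, n=signal.size)


# Hypothetical test signal: a slow wave plus a weaker fast wave.
t = np.arange(1000)
slow = np.sin(2 * np.pi * 5 * t / 1000)          # 5 cycles over the record
fast = 0.5 * np.sin(2 * np.pi * 100 * t / 1000)  # 100 cycles over the record
signal = slow + fast

low = fourier_filter(signal, cutoff=0.02, keep="low")    # recovers the slow wave
high = fourier_filter(signal, cutoff=0.02, keep="high")  # recovers the fast wave
```

Because the two masks are complementary, the low-pass and high-pass outputs add back up to the original signal.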

Adam Subel. Credit: Rice University

Hassanzadeh’s team first performed the Fourier transformation on the equation of its fully trained deep-learning model. Each of the model’s approximately 1 million parameters acts like a multiplier, applying more or less weight to specific operations in the equation during model calculations. In an untrained model, parameters have random values. These are adjusted and honed during training as the algorithm gradually learns to arrive at predictions that are closer and closer to the known outcomes in training cases. Structurally, the model parameters are grouped in some 40,000 five-by-five matrices, or kernels.

“When we took the Fourier transform of the equation, that told us we should look at the Fourier transform of these matrices,” Hassanzadeh said. “We didn’t know that. Nobody has done this part ever before, looked at the Fourier transforms of these matrices and tried to connect them to the physics.

“And when we did that, it popped out that what the neural network is learning is a combination of low-pass filters, high-pass filters and Gabor filters,” he said.
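
To make the idea of reading a kernel in Fourier space concrete, the sketch below takes the 2D Fourier transform of three hand-made five-by-five kernels and checks where their spectral energy sits. Everything here is an assumption for illustration: the kernels are textbook examples rather than weights from the trained model, and the helper names, padding size, and wavenumber threshold are hypothetical.

```python
import numpy as np


def kernel_spectrum(kernel, size=32):
    """Magnitude of the zero-padded 2D Fourier transform, zero wavenumber centered."""
    return np.abs(np.fft.fftshift(np.fft.fft2(kernel, s=(size, size))))


def wavenumber_radius(size=32):
    """Radial wavenumber (cycles per grid point) on the same centered grid."""
    f = np.fft.fftshift(np.fft.fftfreq(size))
    fx, fy = np.meshgrid(f, f, indexing="ij")
    return np.hypot(fx, fy)


def low_wavenumber_fraction(kernel, size=32, radius=0.25):
    """Fraction of spectral energy at radial wavenumbers below `radius`."""
    energy = kernel_spectrum(kernel, size) ** 2
    return float(energy[wavenumber_radius(size) < radius].sum() / energy.sum())


def peak_wavenumber(kernel, size=32):
    """Radial wavenumber at which the spectrum is largest."""
    spectrum = kernel_spectrum(kernel, size)
    index = np.unravel_index(spectrum.argmax(), spectrum.shape)
    return float(wavenumber_radius(size)[index])


# Hand-made 5x5 kernels (illustrative only, not taken from the study's model).
offsets = np.arange(-2, 3)
x, y = np.meshgrid(offsets, offsets, indexing="ij")

box_blur = np.full((5, 5), 1.0 / 25.0)  # local average: low-pass
high_pass = -box_blur.copy()
high_pass[2, 2] += 1.0                  # identity minus average: high-pass
# Gaussian envelope times a cosine wave: a Gabor-like, band-pass kernel.
gabor = np.exp(-(x**2 + y**2) / (2 * 1.5**2)) * np.cos(2 * np.pi * 0.25 * x)

for name, k in [("box_blur", box_blur), ("high_pass", high_pass), ("gabor", gabor)]:
    print(name, round(low_wavenumber_fraction(k), 2), round(peak_wavenumber(k), 3))
```

The averaging kernel concentrates its energy near zero wavenumber, the identity-minus-average kernel pushes it to high wavenumbers, and the Gabor-like kernel peaks at the wavenumber of its cosine carrier. A spectral signature of this kind is what lets a kernel be labeled as one filter type or another.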

“The beautiful thing about this is, the neural network is not doing any magic,” Hassanzadeh said. “It’s not doing anything crazy. It’s actually doing what a physicist or mathematician might have tried to do. Of course, without the power of neural nets, we did not know how to correctly combine these filters. But when we talk to physicists about this work, they love it. Because they are, like, ‘Oh! I know what these things are. This is what the neural network has learned. I see.’”

Subel said the findings have important implications for scientific deep learning, and even suggest that some things scientists have learned from studying machine learning in other contexts, like classification of static images, may not apply to scientific machine learning.

“We found that some of the knowledge and conclusions in the machine learning literature that were obtained from work on commercial and medical applications, for example, do not apply to many critical applications in science and engineering, such as climate change modeling,” Subel said. “This, on its own, is a major implication.”

Reference: “Explaining the physics of transfer learning in data-driven turbulence modeling” by Adam Subel, Yifei Guan, Ashesh Chattopadhyay and Pedram Hassanzadeh, 23 January 2023, PNAS Nexus.

DOI: 10.1093/pnasnexus/pgad015

Chattopadhyay received his Ph.D. in 2022 and is now a research scientist at the Palo Alto Research Center.

The research was supported by the Office of Naval Research (N00014-20-1-2722), the National Science Foundation (2005123, 1748958) and the Schmidt Futures program. Computational resources were provided by the National Science Foundation (170020) and the National Center for Atmospheric Research (URIC0004).
